Binary recompilation is a subject of intense research, but for mere mortals, recompiling binary code or executables can seem completely off-limits.

In my case, I was working on an open server implementation for Cube World, a game with procedurally generated terrain. Reverse-engineering the network protocol turned out to be a simple task, but to actually manipulate or do anything in the game world, we needed data about the terrain.

Unfortunately, Cube World does not save anything about the terrain to disk. Based on a seed, it generates the terrain on the fly, and does not relay anything about it to the client.

After some preliminary reverse-engineering of the terrain generator, it was clear that extracting the logic would take months. Initially, this was a big setback, but then the idea of somehow using the original x86 machine code presented itself.

However, for our open server, we need to support x86-64 as well, and in that case, we absolutely need emulation or recompilation.

Using something like QEMU or Bochs to emulate x86 seemed like a good idea, but they are both very big projects, and having them as a dependency would probably be a showstopper for anyone wanting to use our project. The memory overhead of a JIT would also not be very attractive, and it would be hard to make any guarantees about performance.

Static recompilation to assembler seemed like a much better option, but to keep it portable, we would need to write backends for x86, x86-64, and possibly ARM/PowerPC.

An idea

What about C++ as a target language? The x86 instruction set is a bit bloated, but most instructions map to simple C++ code (except for the condition flags, but we will get to that).

(LLVM as a target could also have been used, but it’s a big dependency, and would most likely not perform better than MSVC/GCC/Clang)

To make things easier for us, we use Python to transform the code from x86 to C++. For reference, you can take a look at the converter source.

The PE executable format: a primer

In our case, our source executable is an x86 win32 PE file. Using pefile.py, we can quickly get the data we need.

For anyone not familiar with PE files, they consist of

- Header

- Image base (usually

0x400000for executables) - Entry point

- Image base (usually

- Sections

.text(x86 code).data(read/write data).rdata(read-only data).reloc(relocation table)

- Import tables

- Other irrelevant junk

The code for reading in the PE file could look as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 | |

Without relocation, the executable depends on its data to start at the

image base. The code in .text references absolute addresses in

virtual memory, which is obviously a problem when recompiling. However, using

the relocation table, we can detect when an address is being referenced, and

deal with it automatically.

The entry point is not the main function, but rather a wrapper called

_start. This sets up the MSVCR library and calls static class

constructors, then fires main. For simplicity, we will start conversion at

the entry point.

Since the original executable depends on both the .data and .rdata

sections, we will convert them to header files using char* arrays, i.e.

1 2 3 4 5 6 7 | |

Doing this conversion by hand would be very tiresome, so doing it in code is more ideal.

The import tables in PE are used to import functions from DLLs, but in our case, we want to rewrite the calls to any imports to our own wrapper functions. In our converter, we can detect when an import is being called using the import map.

Disassembling x86

The x86 instruction set is huge, but luckily, something like pydasm can parse x86 neatly, giving us decoded instructions and operands. The decoding loop could look something like this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | |

Detecting the end of a subroutine is quite tricky. Instructions like

retn, ret, int3 and the far version of jmp usually denote the end of

a subroutine, but YMMV. Subroutines that raise exceptions (like

std::length_error) also cause the subroutine to end, but special checks

need to be added in that case.

Usually, it would be a good idea to disassemble into basic blocks, but it turns out naively running through instructions worked in this case.

What if a subroutine makes a relative jump (using e.g. jnz) into the

subroutine, then returns with ret? For example:

1 2 3 4 5 6 7 | |

We need to add some code to handle this case:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 | |

When an instruction makes a relative jump, we add a ‘label’ at the destination address (this will later correspond to a C++ label). If we still haven’t reached the last label in the current subroutine, we keep disassembling.

This turns out to work for the majority of cases.

Handling condition flags

x86 has a lot of instructions that change the condition flags, also called EFLAGS. The ones relevant for our executable are

- Overflow flag (OF)

- Carry flag (CF)

- Zero flag (ZF)

- Sign flag (SF)

- Direction flag (DF)

The rest are never referenced or used, so for performance, we ignore them completely. Also, DF is never changed, so we set its value to false.

If we take the add instruction, the OF, CF, ZF and SF flags are changed as

follows (presented as C++):

1 2 3 4 5 6 7 8 9 10 11 | |

Even though we can hope the optimizer will eliminate unnecessary code, it is much better to calculate the EFLAGS only when necessary. Both Bochs and QEMU use a technique called ‘lazy EFLAGS’, where they save the operands and calculate the flags when necessary. However, if we search and find the EFLAGS dependencies for each instruction, we can do even better.

Take this piece of code, for example:

1 2 3 4 5 6 7 | |

It is simple to recognize that the jae instruction depends on the add

instruction, so we add the jae instruction as a dependee to the add

instruction. When the converter hits the add instruction, it will then

calculate condition values for all dependees and save them as bools.

As an example, we can transform the above x86 to

1 2 3 4 5 6 7 8 9 10 11 12 | |

This will most certainly be optimized away in the compiler, and should produce

the original add and jae sequence.

The runtime library

Before we can have the converter convert our x86 into C++, we need a runtime library as our foundation.

In this case, we use two classes, CPU and Memory, and instantiate a

global object of each.

Our CPU class could look as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 | |

This provides us with the means of accessing 32bit, 16bit and 8bit registers, and to push/pop stack arguments on/off the stack.

We are leaving out SSE and FPU registers here, but if you want to see a full implementation, look here.

Our Memory class could look as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | |

Again, the full implementation can be found here.

Heap allocation would need a longer write-up, but using something like dlmalloc, we can create a simple interface to heap allocation in a static array.

Because our addresses are limited to 32 bits, in case we are on a 64bit system,

we need to have a way of translating 32 bit addresses to 64 bit (and the other

way around). This is what the translate functions are for.

A good way of doing the translation is to use e.g. the stack as a base,

and have everything offset from that. In that case, the translate would

look as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 | |

This turns out to be very efficient on x86-64 with RIP-relative addressing, as some of offsets can be inlined when dealing with the data/rdata sections.

The converter

Now that we have a runtime library and can disassemble subroutines, how do we convert our executable to C++?

Our main subroutine loop could look as follows:

1 2 3 4 5 6 7 | |

Our ´CPU´ object will then add new functions to the function stack as new functions are called and discovered.

The CPU class could look as follows:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 | |

The class has been abbreviated for clarity, but with everything implemented, you would end up with something like the following:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 | |

Using the relocation table

At the moment, if our code tries to access the rdata or data sections, we will

get a segfault since the code will expect the data to be located according to

the image base. However, since we do have a relocation table, we can detect

when a data/rdata access is being made, and point it directly to our

rdata_section and data_section tables.

This would transform something like this:

1

| |

to this:

1

| |

When we detect a relocatable address, we traverse the section list, and determine which section is being accessed and the offset into it, and use that instead of the original offset.

Handling dynamic calls

Even though we can resolve calls to direct addresses, sometimes,

we will meet instructions like call [eax]. There is no good way to resolve

this statically, so we will have to maintain a list of dynamic subroutine

addresses in the converter. This is one of the unfortunate consequences of

doing static recompilation, but since our target executable is not bound to

change much between releases, it is bearable to update our dynamic subroutine

list each time. For our target, there are only 7 dynamic addresses, so it’s not

a major problem anyway.

In the runtime library, we set up a std::unordered_map with

address/function pointer pairs, so when we get a dynamic call, we just look up

the function in the map.

Handling imports and replacing subroutines

There would be two ways to handle imports:

- Follow import calls, and also convert subroutines in external DLLs

- Write the imported subroutines manually

The MSVCR runtime uses a lot of exotic instructions, so chances are it’s a lot easier to just manually rewrite any imported subroutines from scratch. This way, we can also escape the emulated layer as much as possible, by using the native equivalent of whatever the MSVCR library is doing.

This does add some extra work when MSVCR C++ routines are called (for e.g.

std::cout, std::stringstream, etc.), where the structure of the classes are

implementation-specific. However, if we are clever, we can ignore any

initialization-routines and just write a pointer to our native equivalent in

the start of the guest structure, and so on.

With some work, we can also detect the usage of statically-linked libraries and insert our native equivalent. In this case, the SQLite library was used, so instead of letting the converter run through the SQLite subroutines, we added SQLite to the runtime library and forced the converter to use our handwritten subroutines.

For hot subroutines, it is also ideal to properly reverse-engineer them to

C++ so the effect of the emulation layer isn’t as prevalent. In some cases,

we can even optimize the subroutine further by adding optimizations the

original developer didn’t (by e.g. caching cos values and so on).

Putting it all together

To actually make use of our generated C++ code, we create a simple interface which calls the subroutines related to the terrain generator and returns the terrain data we want. We then compile the generated code and interface into a dynamic library.

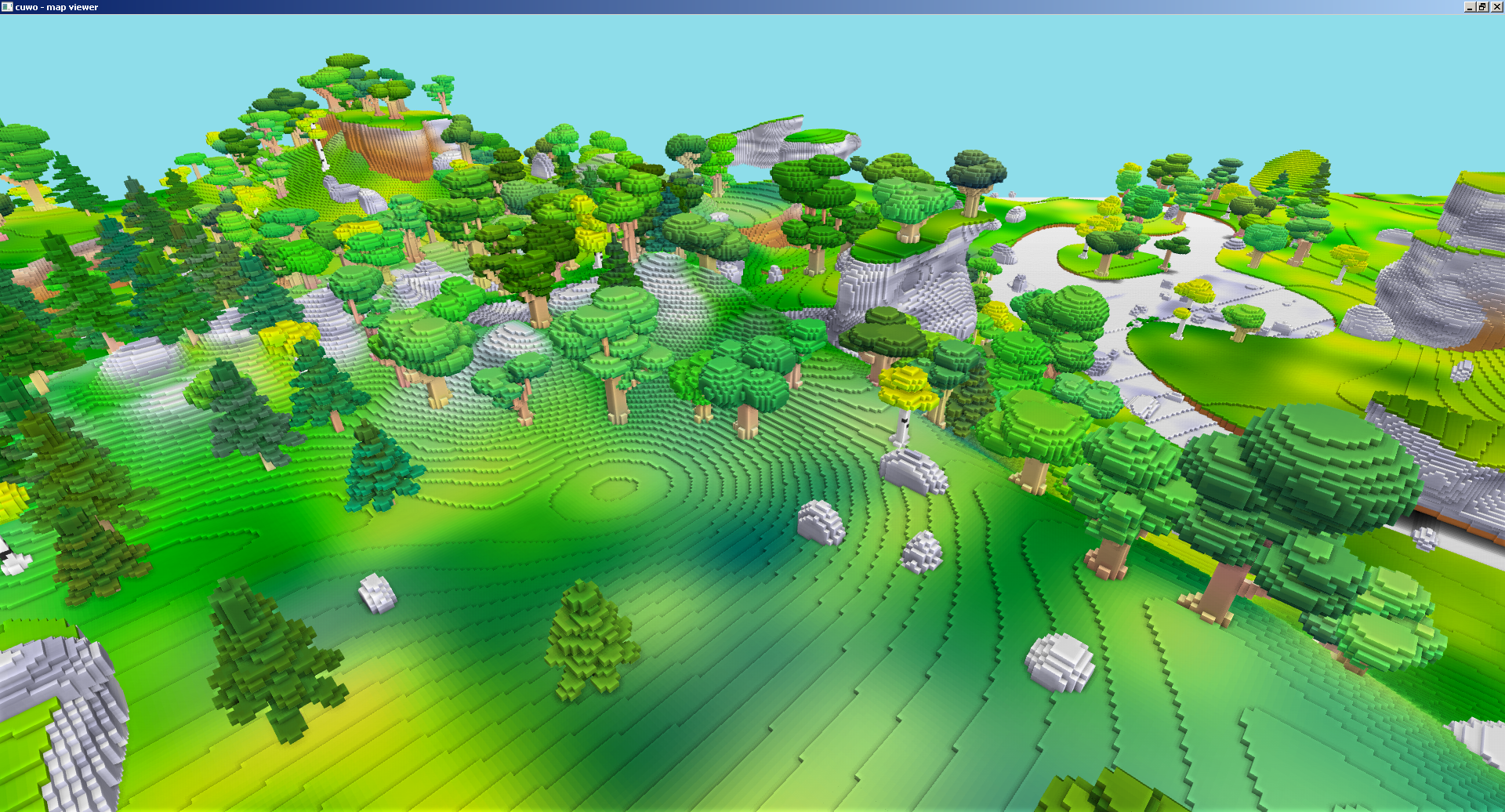

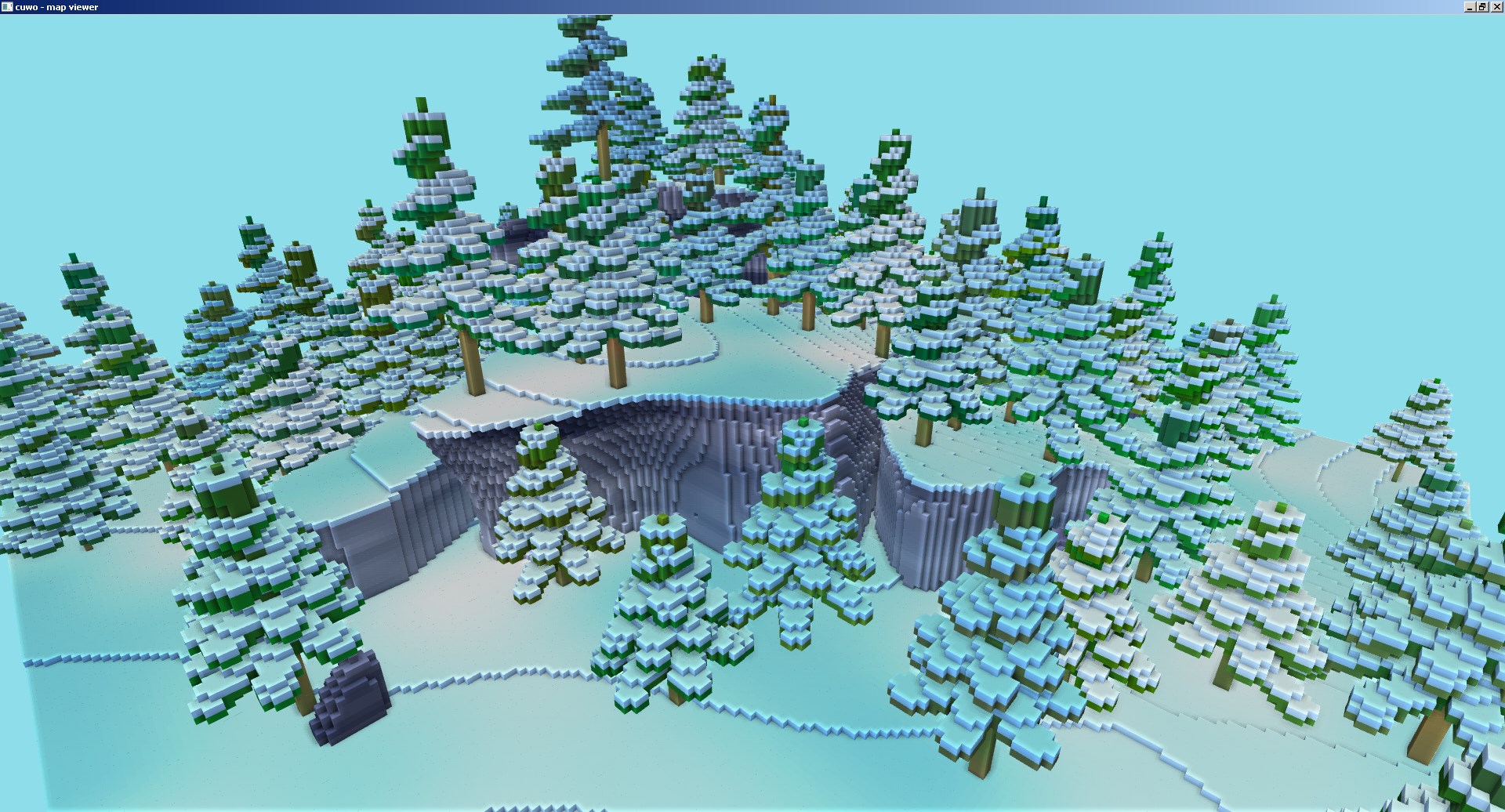

Here are some screenshots that show off the terrain data, generated by the converted x86 code:

Future research

Some things to look into:

Benchmarking

Since we don’t have control over the original code, it is hard to create a benchmark to test the performance of our C++ solution against the target executable. We could try and attach a debugger to the executable, and log the function arguments and execution times, but the debugger may add some overhead to the execution times.

Abstract/physical stacks

Our converter currently doesn’t care how the original code handles the stack. It lets it handle the EBP/ESP registers however it wants. Ideally, we should analyze the code, and figure out how big the stack frame for the current subroutine is. This would allow us to change all EBP/ESP-offset references to stack references. This gets rid of the EBP/ESP registers, but it would also potentially allow the optimizer to make better decisions about stack variables.

Pass arguments directly

Sort of related to the above, we could try and derive the stack arguments to a routine and pass them directly as arguments to the C++ function.

Remove global register variables

Since we use global variables for our registers, the optimizer will be forced to write all the changed registers back to the register variables at the end of the function. If we instead pass the registers as arguments to the function, at least on x86-64, the arguments would be passed as registers.

Direct x86/x86-64 backends

Ultimately, the fastest solution would be converting to x86/x86-64 assembler directly. However, we would lose some of the optimizations that come with MSVC/GCC, but it’s questionable whether the optimizations are enough to outweigh direct translation. It would be much harder to debug and maintain these backends though, so perhaps it’s better to keep optimizing the hot routines and stick with C++.